In-Process Design

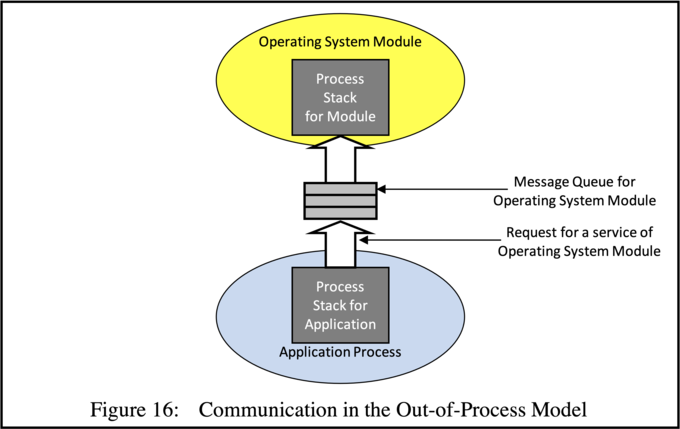

There are two models for decomposing an operating system into processes. The simpler one assigns a separate process to each operating system activity. We refer to this kind of design as out-of-process, because operating system services are provided to an application outside the application's own process, in a separate process. The technique is sometimes called message-oriented, because the application process must pass its parameters as a message to the process that provides the service. This is illustrated in Figure 16.
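As an illustration only, the out-of-process model can be sketched in Python, with threads standing in for processes and a `queue.Queue` standing in for the server's input message queue. The service (`file_server`), its single `"length"` operation and the `call_service` helper are hypothetical names chosen for this sketch, not SPEEDOS interfaces.

```python
import queue
import threading


def file_server(requests: queue.Queue) -> None:
    """Hypothetical server: examines its input queue and services one request at a time."""
    while True:
        op, arg, reply = requests.get()       # take the next request message
        if op == "stop":
            break
        if op == "length":
            # Return the result plus the identity of the thread that did the work.
            reply.put((len(arg), threading.get_ident()))
        else:
            reply.put((None, threading.get_ident()))  # unknown operation


def call_service(requests: queue.Queue, op: str, arg: str):
    """Client side: package the parameters as a message, then wait for the reply."""
    reply: queue.Queue = queue.Queue(maxsize=1)
    requests.put((op, arg, reply))
    return reply.get()                        # caller is blocked until the server answers


if __name__ == "__main__":
    requests: queue.Queue = queue.Queue()
    server = threading.Thread(target=file_server, args=(requests,))
    server.start()
    result, worker = call_service(requests, "length", "hello")
    print(result, worker != threading.get_ident())  # 5 True
    requests.put(("stop", None, None))
    server.join()
```

The client never touches the service's code or data: it only builds a message and blocks in `reply.get()` until the server answers, and the result is computed on the server's thread rather than the caller's.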

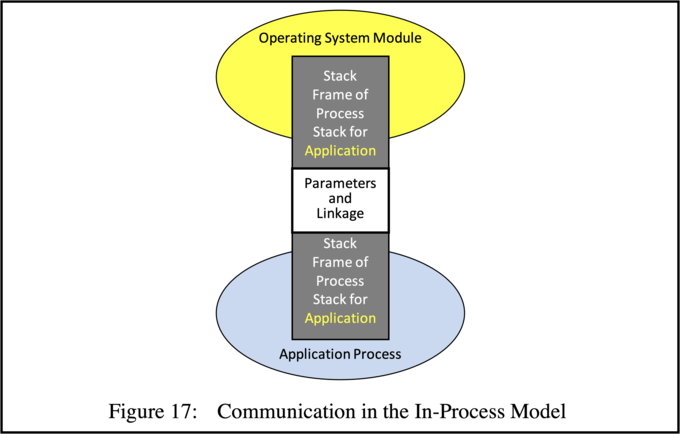

In the second model, operating system services are provided in the process belonging to the application. We refer to this kind of design as in-process. It is sometimes also called procedure-oriented, because the operating system service is implemented as a procedure (see Figure 17) that executes on the stack of the user process requesting the service.
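A matching sketch of the in-process model, under the same assumptions (Python threads standing in for processes; `length_service` is a hypothetical name): the service is an ordinary function, its parameter and local variable live in the frame of the caller's own call stack, and it runs on the caller's thread.

```python
import threading


def length_service(arg: str):
    """Hypothetical in-process service: an ordinary procedure called directly.

    `arg` and `local_count` belong to this call's frame on the calling thread's
    call stack; there is no message and no server thread.
    """
    local_count = len(arg)
    return local_count, threading.get_ident()


if __name__ == "__main__":
    result, worker = length_service("hello")
    print(result, worker == threading.get_ident())  # 5 True
```

Compared with the message-oriented version, nothing is queued and nobody waits for a server: the call simply runs to completion on the caller's thread.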

In an interesting paper, Lauer and Needham [20] compared these two techniques and concluded that they are duals of each other. However, they overlooked two significant points, which are discussed in detail in the PhD thesis of my former student Kotigiri Ramamohanrao [21]. First, there are fundamental differences between the two models with respect to protection and security, which are discussed later. Second, the two models are not equivalent in their dynamic properties, e.g. the level of parallelism attainable in each.

Since a process is a unit of execution, it is easy to fall into the trap of thinking that the fewer operating system processes there are, the less concurrent or parallel activity can be achieved in carrying out operating system tasks. In fact, the opposite is true. In a system in which a process is statically assigned to provide a particular service (i.e. the out-of-process model), different applications must normally use this service serially. This is because the server process examines its input message queue, selects a request and services it; only when it has finished does it select another request. Meanwhile, all the application processes that have requested this service are usually blocked waiting for it.
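The serialization described above can be made visible with a small, hypothetical experiment: several client threads send requests to a single server thread, which records how many requests it is servicing at any moment. Because the server takes one message, services it and only then takes the next, the peak is always 1, however many clients are waiting. The function name `run_out_of_process` and the doubling "service" are assumptions made for the sketch.

```python
import queue
import threading


def run_out_of_process(n_clients: int) -> int:
    """Return the peak number of requests the single server was servicing at once."""
    requests: queue.Queue = queue.Queue()
    active = 0   # requests currently being serviced (only the server touches these)
    peak = 0

    def server() -> None:
        nonlocal active, peak
        for _ in range(n_clients):            # hypothetical: one request per client
            arg, reply = requests.get()       # select a request from the queue...
            active += 1
            peak = max(peak, active)
            reply.put(arg * 2)                # ...service it...
            active -= 1                       # ...and only then select the next one

    def client(i: int) -> None:
        reply: queue.Queue = queue.Queue(maxsize=1)
        requests.put((i, reply))
        reply.get()                           # blocked until the server reaches this request

    srv = threading.Thread(target=server)
    clients = [threading.Thread(target=client, args=(i,)) for i in range(n_clients)]
    srv.start()
    for t in clients:
        t.start()
    for t in clients:
        t.join()
    srv.join()
    return peak


if __name__ == "__main__":
    print(run_out_of_process(8))  # 1
```

The counters need no lock because only the server thread updates them, which is itself a symptom of the model: all work on the service funnels through one thread.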

By contrast, in the in-process model no application process is automatically blocked (also less work for the process scheduler!), since all of them can call the same operating system routine concurrently and execute it in parallel with each other, each on its own process stack. So there are fewer processes, but at the same time there is more potential for parallelism.
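For contrast, the sketch below lets several threads call one shared service routine directly. The `threading.Barrier` is an assumption added purely for the demonstration: it releases nobody until every caller is inside the routine, so a peak equal to the number of callers shows that none had to wait its turn. CPython's global interpreter lock prevents true CPU parallelism of Python bytecode, so this illustrates that the callers proceed concurrently, not a speedup. `run_in_process` is a hypothetical name.

```python
import threading


def run_in_process(n_callers: int) -> int:
    """Return the peak number of callers executing the shared service routine at once."""
    active = 0
    peak = 0
    counters = threading.Lock()            # guards the instrumentation counters only
    # Demo-only assumption: the barrier releases nobody until every caller is
    # inside the routine, so passing it shows all callers were in it together.
    rendezvous = threading.Barrier(n_callers, timeout=5)

    def service(arg: int) -> int:          # one routine, called directly by everyone
        nonlocal active, peak
        doubled = arg * 2                  # local data in this caller's own frame
        with counters:
            active += 1
            peak = max(peak, active)
        rendezvous.wait()                  # all callers are in the routine at this point
        with counters:
            active -= 1
        return doubled

    callers = [threading.Thread(target=service, args=(i,)) for i in range(n_callers)]
    for t in callers:
        t.start()
    for t in callers:
        t.join()
    return peak


if __name__ == "__main__":
    print(run_in_process(8))  # 8
```

Every caller increments `active` before any of them can pass the barrier, so the peak reaches the full number of callers, whereas the single-server version never exceeds 1.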

This does not imply that full parallelism can always be achieved in an in-process system. The difficulty arises when processes executing in the same module need to access the same data structure concurrently. If these processes only read the data there is no problem, but if they modify it, the data structure could become inconsistent, leading to wrong results. In SPEEDOS this issue is resolved using semaphores [22].
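How SPEEDOS applies its semaphores is not detailed here, so the following is only a generic sketch of the standard technique: a binary semaphore (Dijkstra's P and V, mapped onto `acquire` and `release` of Python's `threading.Semaphore`) guarding a read-modify-write of a hypothetical shared table. `OpenFileTable` and `hammer` are illustrative names, not SPEEDOS code.

```python
import threading


class OpenFileTable:
    """Hypothetical shared OS data structure guarded by a binary semaphore."""

    def __init__(self) -> None:
        self.count = 0
        self.sem = threading.Semaphore(1)  # binary semaphore, initially free

    def open_file(self) -> None:
        self.sem.acquire()                 # P(sem): enter the critical section
        try:
            current = self.count           # read-modify-write of shared data;
            self.count = current + 1       # without the semaphore an update could be lost
        finally:
            self.sem.release()             # V(sem): leave the critical section


def hammer(table: OpenFileTable, n_threads: int = 8, calls_each: int = 10_000) -> int:
    """Have several threads modify the shared table concurrently; return the final count."""
    def worker() -> None:
        for _ in range(calls_each):
            table.open_file()

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return table.count


if __name__ == "__main__":
    print(hammer(OpenFileTable()))  # 80000
```

With the semaphore in place none of the 8 × 10,000 increments is lost; without the `acquire`/`release` pair, two callers could read the same old value and one update would overwrite the other.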

The important point here is that processes executing in the same operating system module (or user module, e.g. a file module) in an in-process system do not always have to take turns using that module. First, not all the data structures accessed by these processes are shared: even when they execute the same routine, they may only need local data on their private stacks, which is not shared and therefore causes no conflicts. Second, even when they access shared data, they may only be reading it rather than modifying it. So in practice more parallelism can be achieved in an in-process design.
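The observation that readers need not exclude one another is what the classic readers-writers lock exploits. The sketch below is the well-known readers-preference construction from two binary semaphores, offered as an illustration rather than as the SPEEDOS mechanism; `ReadersWriterLock`, `concurrent_readers` and the `shared_table` contents are hypothetical. Data on each caller's private stack needs no lock at all.

```python
import threading


class ReadersWriterLock:
    """Readers-preference lock built from two binary semaphores.

    Any number of readers may hold it at once; a writer must hold it alone.
    """

    def __init__(self) -> None:
        self._readers = 0
        self._count_sem = threading.Semaphore(1)  # protects self._readers
        self._data_sem = threading.Semaphore(1)   # held by one writer, or by the readers as a group

    def acquire_read(self) -> None:
        with self._count_sem:
            self._readers += 1
            if self._readers == 1:                # first reader locks writers out
                self._data_sem.acquire()

    def release_read(self) -> None:
        with self._count_sem:
            self._readers -= 1
            if self._readers == 0:                # last reader lets writers back in
                self._data_sem.release()

    def acquire_write(self) -> None:
        self._data_sem.acquire()

    def release_write(self) -> None:
        self._data_sem.release()


def concurrent_readers(n: int) -> bool:
    """Return True if n readers were all inside the read section at the same time."""
    rw = ReadersWriterLock()
    shared_table = {"open_files": 3}              # hypothetical shared data, only read here
    rendezvous = threading.Barrier(n, timeout=5)
    results: list = []

    def reader() -> None:
        rw.acquire_read()
        try:
            _ = shared_table["open_files"]        # read-only access needs no exclusion
            rendezvous.wait()                     # passes only if all n readers are inside together
            results.append(True)
        except threading.BrokenBarrierError:
            results.append(False)
        finally:
            rw.release_read()

    threads = [threading.Thread(target=reader) for _ in range(n)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return len(results) == n and all(results)


if __name__ == "__main__":
    print(concurrent_readers(4))  # True
```

This variant favours readers: a steady stream of readers can keep a writer waiting indefinitely, which is why writer-preference variants of the same idea also exist.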